Computers are slowly taking over jobs that humans have done for years. Self-driving cars aren't the future; they're already here. It won't be long before taxi, truck, and bus drivers are replaced by robots. The first autonomous trucks are already being driven on highways in the United States ("Coming Soon," 2015). It would not be surprising if these changes were met with resistance; perhaps laws will be passed restricting automation in favor of human workers. As computers take over the job market, millions of people will be out of work with nowhere to turn. Life in the future will certainly be different from the world we live in now.

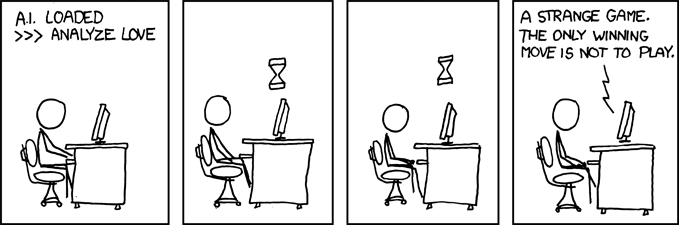

As if the collapse of the economic system weren't bad enough, there is also the possibility that super-intelligent machines will one day threaten the existence of humanity. Elon Musk and Stephen Hawking, along with hundreds of computer scientists, have signed an open letter warning about the dangers of artificial intelligence, and Bill Gates has since joined them in voicing these concerns (Readhead, 2015). However, all of this is merely speculation about what is to come, and the future need not be as bleak as it sounds. Problems that are hard to solve today may be almost trivial for the technology of tomorrow. The rise of automation is inevitable, and it is up to us to make the best use of it. If we can overcome these hurdles, the future will certainly be bright.

References

Coming Soon To A Highway Near You: A Semi Truck With A Brain. (2015, May 10). Retrieved May 11, 2015, from http://www.npr.org/blogs/alltechconsidered/2015/05/10/405598189/coming-soon-to-a-highway-near-you-a-semi-truck-with-a-brain

Readhead, H. (2015, January 29). Bill Gates joins Stephen Hawking and Elon Musk in warning of A.I. dangers. Retrieved May 11, 2015, from http://metro.co.uk/2015/01/29/bill-gates-joins-stephen-hawking-and-elon-musk-in-artificial-intelligence-warning-5041966/